Gall's law: A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be patched up to make it work. You have to start over with a working simple system.

We’ve all heard of “KISS” (Keep It Simple, Stupid), but in practice many of us err towards complexity, even when we think we’re keeping things simple. This leads us to build feature-rich products that are ready for scale - but the scale never comes, because we’ve built something that isn’t quite what our users want. I believe this was one of my biggest shortcomings until relatively recently - I operated within the range of “comfortably simple”.

But being comfortably simple may also mean quite comfortably spending more money than you need to comfortably create a product nobody wants. A better approach? Taking the “uncomfortably simple” route instead.

Simplicity is uncomfortable

“Uncomfortably simple” is an approach where we strip away as much functional and technical complication as we possibly can, then strip away some more. What is left can be built and put in front of real potential users to get feedback. Then we do it again, and again.

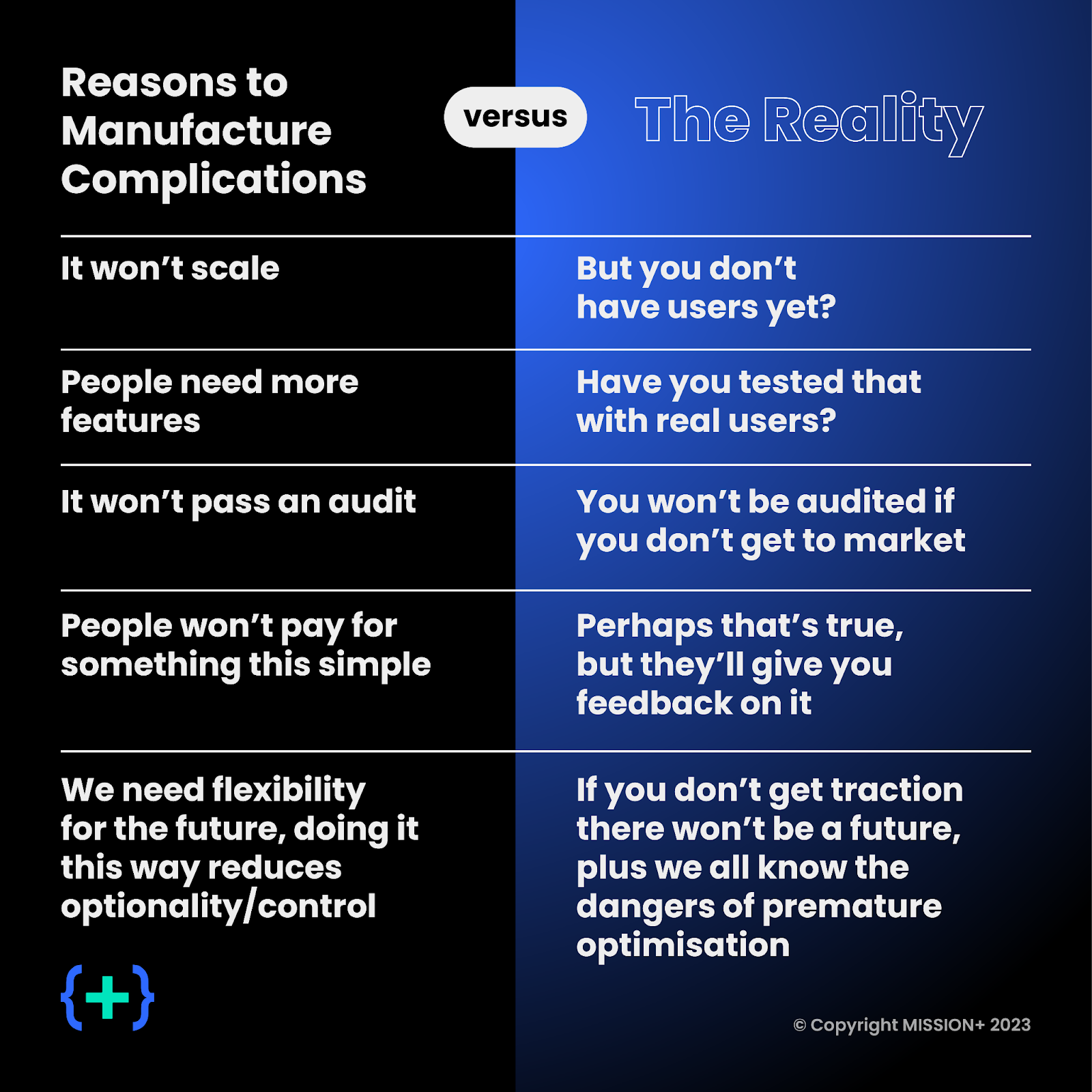

As builders, this level of simplicity is so uncomfortable that when faced with it, the knee-jerk reaction is often to introduce complications under the guise of depth. You may have found yourself responding with some of these assertions:

Complexity is born from iterative simplicity

Essential complexity is born from iterative simplicity. As each simple iteration is completed, tested and accepted, we grow ever closer to our users’ complex desires. This takes some time, but has an ever-compounding impact: we overestimate what we can achieve in the short term, but underestimate what we can achieve in the long term.

Anyone looking at the first version of JavaScript would be unable to fathom the ecosystem available now. Even the most optimistic cell phone owner in the 1990s would not have predicted TikTok. On a smaller scale, look at early versions of “The Facebook” compared to what it became.

So what are some concrete steps we can take to reduce the complexity in our products?

Build vs Buy vs Assemble

Once upon a time, I believe the build vs buy argument held more weight. Applications were mostly closed ecosystems, so interoperability was low. This is no longer the case - API-driven thinking dominates, and so weaving a web of specialist SaaS applications is an opportunity that’s hard to ignore. Therefore, buy until proven otherwise.

A recurring “transformation” theme I talk about is that most companies don’t want, or need, innovation. Companies need to incorporate the state of the art, which I assert is the leading open-source framework of the day. When determining a stack, architects should mostly just adopt the most popular open-source framework, unless they truly have unique problems - which almost no one has. Engineering has become as much about assembling a solution as building one.

Prototyping

The art of the prototype is a product squad’s best friend. The tooling available, such as Figma, Marvel and Bubble, has advanced faster than most companies can adopt it. However, many fall into the trap of prototyping easy “feel good” flows that give satisfaction but don’t test anything, instead of proving out areas of ambiguity. Yes, you can build a beautiful onboarding journey, with all your shiny corporate colours, but what does that prove? A rough prototype that focuses a potential user on giving feedback about an actual business problem is worth 100 times an academically excellent technical solution that proves nothing.

Prototypes are experiments. Experiments ought to fail sometimes, perhaps even the majority of times. Anyone who brags that their prototypes are always successful and adopted in production has missed the point. This approach necessitates experimentation, and therefore cutting corners.

A prototype is not Version One of a piece of software. A prototype should always be thrown away once the hypothesis is proven (or not). Any other case indicates the prototype is over-engineered and overly complex. Anything that does not test the hypothesis should be faked.
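To make “fake everything that does not test the hypothesis” concrete, here is a minimal sketch. It assumes a hypothetical prototype whose hypothesis is that users find a spending summary useful. `FakeBankFeed`, `Transaction`, `spending_summary` and the canned figures are all illustrative assumptions of mine, not taken from any real product. Only the summary is real; the bank integration is a hard-coded stand-in.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    category: str
    amount: float


class FakeBankFeed:
    """Hypothetical stand-in for a real bank integration.

    It returns canned data, because connecting to a bank
    does not test the hypothesis.
    """

    def fetch_transactions(self, user_id: str) -> list[Transaction]:
        return [
            Transaction("groceries", 82.50),
            Transaction("transport", 40.00),
            Transaction("groceries", 17.50),
        ]


def spending_summary(feed: FakeBankFeed, user_id: str) -> dict[str, float]:
    """The part under test: total spend per category."""
    totals: dict[str, float] = {}
    for tx in feed.fetch_transactions(user_id):
        totals[tx.category] = totals.get(tx.category, 0.0) + tx.amount
    return totals


if __name__ == "__main__":
    print(spending_summary(FakeBankFeed(), "demo-user"))
```

If the hypothesis holds, the fake gets thrown away along with the rest of the prototype; if it doesn't, no time was spent on an integration nobody needed.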

When choosing function points to prototype, prioritise based on the most uncertain - but feasible - point, and work backwards. The earlier you get feedback, the more likely you are to build a valuable product.

I cannot prove it, but I imagine Microsoft Clippy could have been prototyped and a lot of effort and heartache saved. Picture how many failed products are out there that we’ve never heard of, that have had billions of dollars poured into building them before the creators realised there was no market. Prototyping saves money and gets you to product-market fit faster.

Scope

The area where one can find the most opportunities to simplify is scope. The most important element in software development is the feedback loop and making it as tight as possible. Therefore, reduce the scope of a release to the point of being uncomfortable, but get that reduced scope in front of real people as soon as possible.

For example, if a feature is only going to be used occasionally (especially if you don’t have users yet) such as an account termination or a year-end process, then perhaps it can be descoped and handled manually by an operations team. Having so many users or transactions that this is complicated is a great problem to have. Or perhaps a feature will have multiple journeys - get feedback on the most likely journey first before building the others.
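As a rough sketch of what descoping to a manual process might look like in code, the product can simply record the request and leave the work to a person. Everything here (`OpsQueue`, `ManualTask`, `request_account_termination`, the in-memory list) is a hypothetical illustration; a real team might use a ticketing tool or a shared inbox instead.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ManualTask:
    kind: str
    account_id: str
    requested_at: datetime


@dataclass
class OpsQueue:
    """Hypothetical in-memory list that an operations team works through by hand."""

    tasks: list = field(default_factory=list)

    def add(self, task: ManualTask) -> None:
        self.tasks.append(task)


def request_account_termination(queue: OpsQueue, account_id: str) -> str:
    """Record the request only; no automated termination logic exists yet."""
    task = ManualTask("terminate_account", account_id, datetime.now(timezone.utc))
    queue.add(task)
    return "Your request has been received and will be processed by our team."
```

The user-facing journey exists, feedback can be gathered on it, and the automation is deferred until volume makes the manual step painful.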

If the features in a release are innovative, or experimental, do a prototype first to get that real feedback. If you don’t know the target users to get feedback from, your venture is unlikely to succeed. Once a prototype has shown that something is interesting to your users, add the most simple version of it to your product and elicit more feedback.

Microservices

While I’m a fan of abstracting business logic into usable components, the movement has gone too far. You don’t need your system to be so complex when you’re starting out! Microservices are probably responsible for more over-engineered early-stage architectures than any other design choice. Multiple repos, repeated code, shared libraries, complicated build and deployment pipelines - all for “microservice purity”.

Split the logic up, but keep it in libraries and repos that roughly match the shape of your teams. Therefore, if you have one team, you ought to have one repo and one set of libraries. With good unit testing and automated deployment, splitting that up in the future is not as impossible as it may seem.
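One way to keep logic split without splitting the repo is to put a small interface between modules inside the same codebase. The sketch below is only an illustration under that assumption; `PricingService`, `InProcessPricing` and `checkout_total` are hypothetical names.

```python
from typing import Protocol


class PricingService(Protocol):
    """Boundary that other modules depend on (hypothetical)."""

    def quote(self, quantity: int) -> float: ...


class InProcessPricing:
    """Today: plain code in the same repo and the same process."""

    def __init__(self, unit_price: float) -> None:
        self.unit_price = unit_price

    def quote(self, quantity: int) -> float:
        return self.unit_price * quantity


def checkout_total(pricing: PricingService, quantity: int, shipping: float) -> float:
    """Checkout knows only the boundary, not how pricing is deployed."""
    return pricing.quote(quantity) + shipping
```

If a second team later takes ownership of pricing, a class that calls a remote service could provide the same `quote` method, and `checkout_total` would not need to change.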

This approach brings to mind Conway’s Law: Any organisation that designs a system (defined broadly) will produce a design whose structure is a copy of the organisation's communication structure. This law can sometimes work backwards - if you create an overly complex set of services early on, you’re probably going to have to organise your team’s communication structure around it. It’s probably not worth it.

Compliance

This is a delicate area, especially in regulated businesses like financial services, and I plan to write more about it. To summarise my views:

- There is no such thing as a no-risk company. A no-risk company would never get off the ground.

- Understand the tech risk guidelines, and take a risk-balanced view (especially until you have clients & scale).

- Understand data hygiene and safeguard customer data.

- Ensure you don’t accept money from or send money to bad guys.

- Don’t concentrate on risk processes at the expense of the results.

Rogue employees are a real problem, but you have to trust your team, plus there are laws and repercussions for criminal behaviour. If your processes are overly weighted towards preventing bad employees from causing damage (negative change), you have probably also restricted your lawful employees from facilitating improvements (positive change). The key is balance.

Regulators welcome and encourage innovation and are mostly reasonable for early-stage companies. As long as you have identified risks, and have plans to mitigate them, you’re mostly in good shape (but don’t lose client data!).

That said, if you are lucky and you start getting real users on the system, be prepared to invest resources for the ever-increasing compliance overhead. I don’t necessarily think a lot of this overhead truly reduces risk (one for a future article), but like it or not it will come, so budget for it.

If you don’t have clients (or worse, you don’t have a company because you ran out of money), you probably don’t have risk. Keep this principle in mind when making decisions.

Performance

The same engineer who talks about premature optimisation being the root of all evil then goes on to worry whether their database will be fast enough under load, and adds a caching layer. Weird bugs are then found with just ten users - oops, there was a bug in the caching logic, and we just put off a potential paying customer.

Performance concerns (rightly) haunt all software engineers, but don’t worry too much until you have real usage metrics; without them, you’ll probably focus on the wrong area anyway. Keep it simple, stress test regularly, and fix the bits that fall over. That way you know you’re focusing effort on actual problems, and not anticipating issues while introducing unnecessary complexity as a side effect.
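A stress test does not need to be elaborate to be useful. The following is a minimal sketch using only the Python standard library; `handle_request` is a hypothetical stand-in for whatever your system actually does, and the request counts are arbitrary assumptions.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(n: int) -> int:
    """Hypothetical stand-in for the real request handler under test."""
    return sum(range(n))


def timed_call(n: int) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    handle_request(n)
    return time.perf_counter() - start


def stress_test(requests: int, workers: int, n: int = 10_000) -> dict:
    """Fire `requests` calls across `workers` threads and summarise latency."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latencies = sorted(pool.map(timed_call, [n] * requests))
    return {
        "count": len(latencies),
        "median_s": statistics.median(latencies),
        "max_s": latencies[-1],
    }


if __name__ == "__main__":
    print(stress_test(requests=200, workers=8))
```

Run something like this regularly and only reach for a cache, or any other optimisation, when the numbers show a specific part falling over.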

Invest in what will make iteration easier

After spending all this time talking about things to simplify or fake, which parts of the system are worth investing more time in?

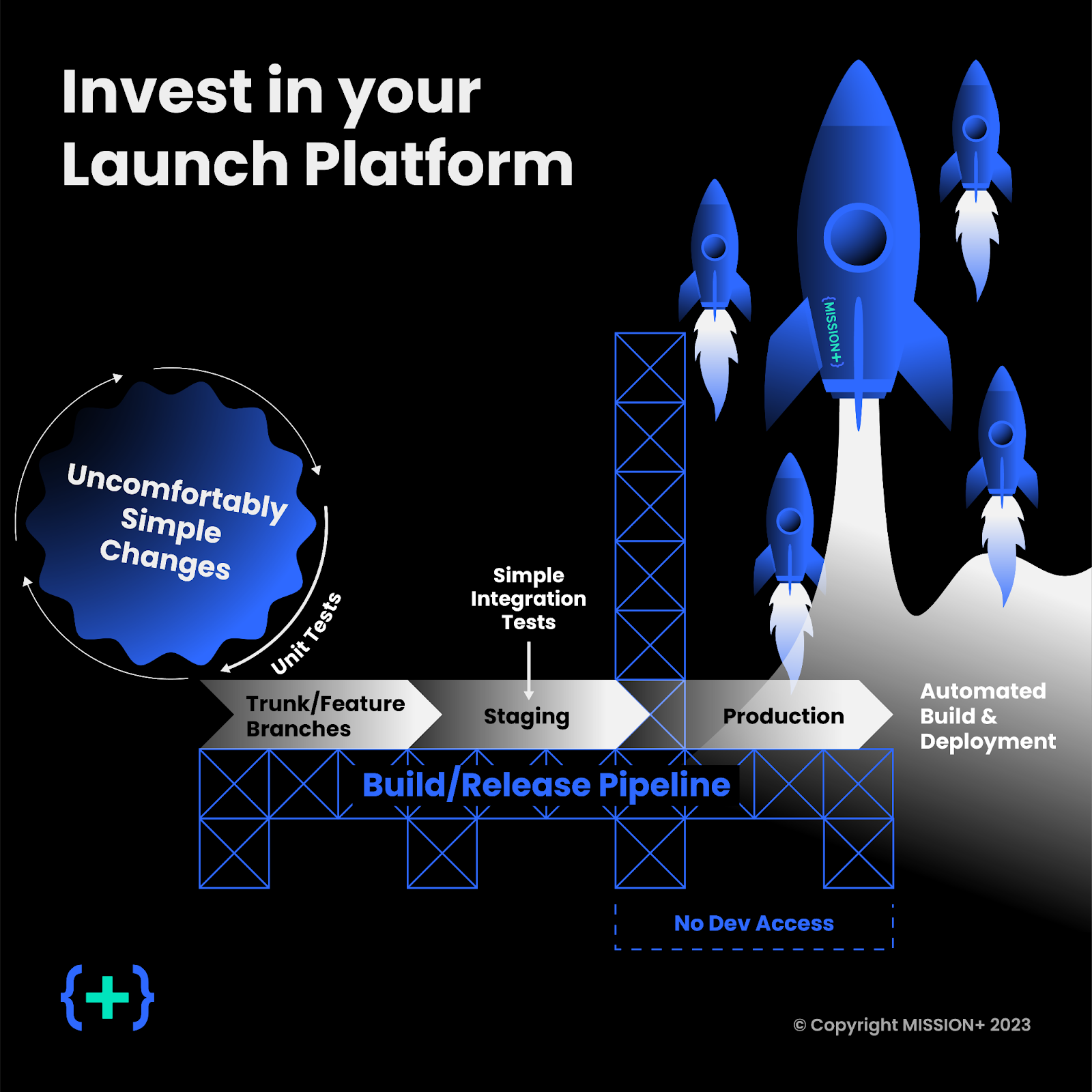

First is a well-integrated build/release pipeline. All changes that move between environments should be driven by this pipeline, and it should include automated integration tests. Getting this right early allows for:

- Visibility into the state of the production environment (as changes/config must be source-controlled, no environment mismatch).

- Easier compliance: if done well, developers don’t need write access to production, and everything is logged.

- Rapid deployment to production, allowing for iterative refactoring of the environment.
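The core idea of such a pipeline can be sketched in a few lines: every change passes through the same ordered stages, a failure stops the run, and each step is logged. This is an illustrative toy, not a replacement for a real CI/CD tool; `run_pipeline` and the stage names are hypothetical.

```python
from typing import Callable

# A stage is a name plus a function that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages in order, stop at the first failure, and return the log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            break  # later stages, such as deploy, never run
    return log
```

For example, with stages named `build`, `integration-tests` and `deploy`, a failing integration test means the deploy stage is never reached, and the log shows exactly where the run stopped.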

Second is automated unit testing. You don’t really understand your code until you have unit tests in place; without them, every modification is a roll of the dice. Unit tests give you confidence in your changes. Some readers may be surprised to see this, assuming it is a given in modern software. It is my observation that the majority of software engineers still don’t write unit tests. Michael C. Feathers (Working Effectively with Legacy Code) defines legacy code as code without automated unit tests - meaning that many teams are writing legacy code right now.
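For readers who have not written one, a unit test can be very small. Here is a minimal sketch using Python’s built-in `unittest` module; `apply_discount` is a hypothetical business rule invented purely for illustration.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


class ApplyDiscountTest(unittest.TestCase):
    def test_reduces_price(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_percent_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)
```

Saved to a file, these tests can be run with `python -m unittest`, and the same command can be wired into the release pipeline so every change is checked automatically.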

By having a strong, automated testing and release platform, product teams can quickly respond to both functional and non-functional issues. New versions of the application can be quickly tested and deployed, shortening the feedback cycle.

There is an Elon Musk interview that I have long lost, where he talks about SpaceX having two products: the rockets themselves, and the launch platforms. As you can see, my recommendation is to keep the rocket simple, but invest in the launch platform. That way, you can test a whole host of rockets in the shortest time, and find the one that gets you to Mars.

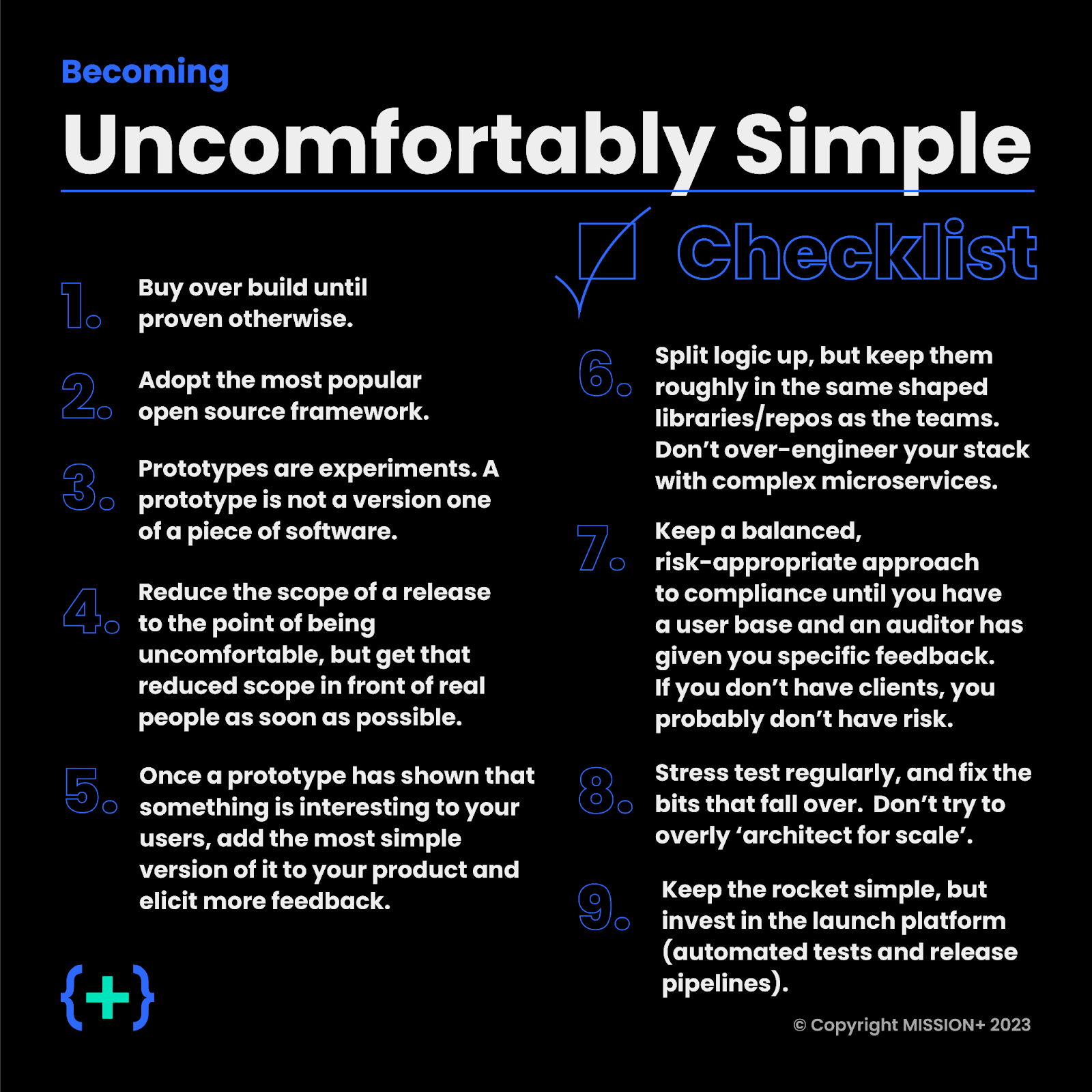

If you take away nothing else… here’s a checklist to introduce an uncomfortably simple culture into the way you build technology:
