It seems we are hitting the Generative AI Trough of Disillusionment. Barely a day goes by without an article along the lines of “Gartner Predicts 30% of Generative AI Projects Will Be Abandoned After Proof of Concept By End of 2025”.

What’s the problem? Does Gen AI not live up to the promise? There are Proofs of Concept (PoCs) everywhere; did they fail? Not directly - the issue is a misunderstanding of how AI can be adopted, the challenges it introduces, and the difficulties of getting something new into a production environment. Perhaps these PoCs are not proving Concepts, but rather proving Complexity!

Organisations have business functions, supported by technologists. But they also have compliance, security & governance functions which, particularly in regulated environments, have the important job of making sure everything is aligned with regulation & best practice.

These functions act like the white blood cells of the human body, rushing out to attack an invading foreign object. This is an essential activity; critical business activities must operate safely and without disturbance from unproven ideas. The white blood cells must be convinced that the new technology benefits the host and does not need to be destroyed. Many PoCs skipped this step, but it can only be ignored for so long.

The architecture function of an enterprise helps define standards and rules, preventing fragmentation and duplication within the technology ecosystem.

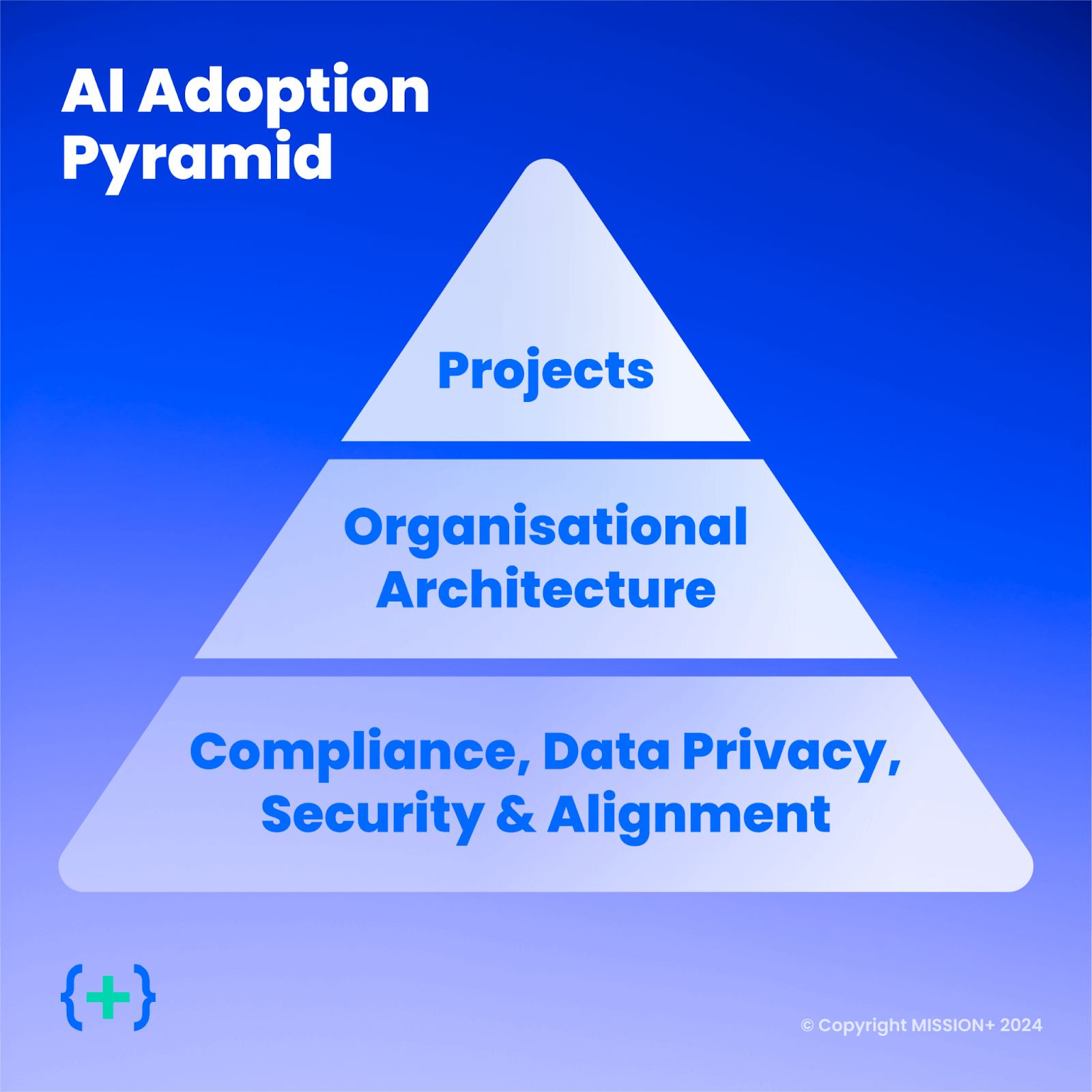

Each layer of this pyramid builds upon the one below it, and is a necessary foundation upon which to build. We can jump to a PoC, but that is jumping to the top of the pyramid; when it comes to productionisation, the lack of alignment in the lower levels prevents us from moving forward.

Compliance, Data Privacy, Security & Alignment

The base of the pyramid is Compliance, Data Privacy, Security & Alignment. This is the layer that works with the White Blood Cells, gently guiding the immune system to a response that isn’t outright rejection.

Generative AI models and tools are often not self-hosted, and so there are risks - and potentially legal requirements - in terms of what data is sent where. Furthermore, entirely new risks and concerns may arise: for example, what does the organisation do when their chatbot gives misleading information, or a model’s hallucination could impact an individual’s health or livelihood?

At a minimum, policies:

- Need to be updated to reflect what is and isn’t allowed in terms of models used and data transferred

- Need to include information around what productivity tools are allowed

- Need to be created to handle the new situations that may occur

There are parallels to cloud adoption in regulated organisations. Some developers were frustrated that they couldn’t get their companies to move to the cloud as quickly as a startup could, and AI adoption is following the same pattern. If you were involved, how long did the cloud journey take at your organisation? 8 or more years? Think of the immense amount of work AWS and others have put into education through things like the AWS Compliance Center before risk & compliance teams were aligned.

Organisational Architecture

The next layer in the pyramid is Organisational Architecture. First up in this layer is organisational hierarchy. Specific named individuals need to be given responsibility for AI adoption and its success. They will spread awareness of the policies and of the potential for AI within the company.

One approach to this could be an “AI Center of Excellence”, but I would encourage people to read Forrest Brazeal’s excellent article Why “central cloud teams” fail (and how to save yours) first. There are many lessons learnt from failed Cloud Centres of Excellence which are applicable here.

Once you have aligned what is and isn’t allowed from a risk & regulatory perspective and the organisational hierarchy has been implemented, you have the following decisions to make:

- Which LLMs are allowed?

- Which tools will be used for security testing, model performance evaluations, guardrails, etc?

- Which productivity tools will be permitted for each department?

- What processes will be implemented to securely connect models to data, and ensure consumers of the model output are allowed to see that data?

Someone within the organisation needs to be given the responsibility to create and maintain the whitelist of allowed models, tools and frameworks.
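To make the idea of a maintained whitelist concrete, here is a minimal sketch of how it might be expressed and checked in code. The model names and data classifications are entirely hypothetical placeholders; a real list would come from your own risk & compliance decisions.

```python
# Hypothetical allowlist: each permitted model maps to the data
# classifications that policy allows to be sent to it.
ALLOWED_MODELS = {
    "example-hosted-llm": {"public", "internal"},
    "example-self-hosted-llm": {"public", "internal", "confidential"},
}


def is_permitted(model: str, data_classification: str) -> bool:
    """Return True only if the model is on the list for this class of data."""
    return data_classification in ALLOWED_MODELS.get(model, set())


if __name__ == "__main__":
    print(is_permitted("example-hosted-llm", "confidential"))       # False
    print(is_permitted("example-self-hosted-llm", "confidential"))  # True
    print(is_permitted("unreviewed-model", "public"))               # False
```

Anything not on the list is rejected by default, which mirrors how the compliance layer at the base of the pyramid is likely to think about it.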

There are also parallels to the adoption of modern data processes and organisational integration. Once upon a time data was part of the technology department - which made sense when data existed only for an application to function. But once the data was being operated upon by humans rather than applications, it got pulled out. Many organisations now have a Data & Analytics group which sits independently of technology.

In this sense, is AI different to Analytics? Traditional AI probably not, but Generative AI yes. Content creation, productivity & coding tools, personalisation, and automation across all LOBs - including all associated policies & governance - is a big deal. It warrants its own place within the organisational hierarchy.

Projects

Once the policies are in place, and the organisation aligned, it’s time to choose the projects. A lot of PoCs have gone after repetitive operational tasks for automation. That might make sense, but not necessarily.

Let’s say your AI Automation process is estimated at 99% correct. If you run it 1000 times a day, that still means 10 failures. That’s probably an unacceptable number, and 99% correct is probably overly optimistic right now.
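The arithmetic is worth playing with for your own volumes. The sketch below assumes every run is independent and equally likely to fail, which is a simplification; the function names are my own.

```python
def expected_failures(runs_per_day: int, accuracy: float) -> float:
    """Average number of wrong results per day, assuming independent runs."""
    return runs_per_day * (1.0 - accuracy)


def chance_of_any_failure(runs_per_day: int, accuracy: float) -> float:
    """Probability that at least one run in the day is wrong."""
    return 1.0 - accuracy ** runs_per_day


if __name__ == "__main__":
    # 1000 runs a day at 99% correct, the example from the text.
    print(round(expected_failures(1000, 0.99)))       # 10
    print(chance_of_any_failure(1000, 0.99) > 0.999)  # True
```

At that volume a failure-free day is vanishingly unlikely, so the question is not whether errors happen but who catches them.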

Detecting the errors is going to be hard. Cory Doctorow lays this out beautifully in "Humans in the loop" must detect the hardest-to-spot errors, at superhuman speed - the essential idea is that hallucinations will be subtle, statistically plausible errors, increasingly indistinguishable from truth.

“Centaur chess” is a term popularised by Garry Kasparov in the early 2000s to describe a chess player aided by an AI assistant. The centaur player would typically be able to beat either a human, or a pure AI. Importantly, the human drove the process, with the AI feeding real-time information and options to improve their decision making.

Doctorow’s Reverse Centaurs are the opposite - AIs driving the decision making process, but humans checking their homework. A tough job if the mistakes are - by definition - statistically plausible. In the future I foresee us using multiple different models that check each other to try and work around this issue.
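One speculative way to picture models checking each other is a simple agreement vote. This is only a sketch under heavy assumptions: each “model” is a plain Python callable standing in for a real LLM call, answers are compared by exact string match after normalising case and whitespace, and models that share training data may well agree on the same wrong answer.

```python
from collections import Counter
from typing import Callable, Optional, Sequence

# Hypothetical stand-in for a real model call: prompt in, answer out.
Model = Callable[[str], str]


def cross_checked_answer(
    prompt: str, models: Sequence[Model], min_agreement: int = 2
) -> Optional[str]:
    """Return the most common answer if enough models agree, else None.

    None signals that the result should be escalated to a human.
    """
    answers = [model(prompt).strip().lower() for model in models]
    if not answers:
        return None
    answer, votes = Counter(answers).most_common(1)[0]
    return answer if votes >= min_agreement else None


if __name__ == "__main__":
    stubs = [lambda p: "Paris", lambda p: "paris ", lambda p: "Lyon"]
    print(cross_checked_answer("Capital of France?", stubs))     # paris
    print(cross_checked_answer("Capital of France?", stubs, 3))  # None
```

Disagreement at least tells the human where to look, which is more than a single confident model can offer.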

If the value of the cost savings outweighs the cost of having a human check the result every time, it’s a good candidate for a project. This probably means lower frequency, higher value problems - the opposite of where many PoCs are currently being attempted!
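As a rough illustration of that test, the sketch below compares two made-up candidates. Every number and name here is invented for the example, and it deliberately ignores the cost of errors that slip past the reviewer.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    runs_per_year: int
    saving_per_run: float       # value gained each time the task is automated
    review_cost_per_run: float  # cost of a human checking each result

    def net_value_per_year(self) -> float:
        """Saving left over after paying for a human check on every run."""
        return self.runs_per_year * (self.saving_per_run - self.review_cost_per_run)

    def is_good_candidate(self) -> bool:
        return self.saving_per_run > self.review_cost_per_run


if __name__ == "__main__":
    candidates = [
        # High frequency, low value: the review costs more than the saving.
        Candidate("ticket triage", 250_000, 0.50, 2.00),
        # Low frequency, high value: the review is cheap relative to the saving.
        Candidate("contract first draft", 200, 800.00, 150.00),
    ]
    for c in candidates:
        print(c.name, c.is_good_candidate(), c.net_value_per_year())
```

With these invented figures the high-volume task loses money once every result is checked, while the low-volume one still comes out ahead.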


When selecting projects, also take into account that without high quality input data, it is next to impossible to get high quality output. Even the simplest example - putting a chatbot on top of internal documents - will fail if your internal documents are out of date or incomplete. Now extrapolate that out to a mission-critical process.

Building The Team

Building and aligning the AI Adoption Pyramid will take a lot of effort, and multiple dedicated resources. Many companies have started trying to hire “rockstar Machine Learning engineers” to fill the gap. There are several challenges with this:

- There aren’t many “rockstars” out there looking for jobs.

- The ones that are probably don’t want to work for you unless you’re an extremely well-funded AI laboratory doing fundamental research for your products.

- Your problems probably don’t need much deep AI/ML understanding, but rather specific domain and organisational knowledge, along with a keen appreciation of how to use existing foundational models properly.

You can’t hire your way out of this. You have to train and upskill your existing team. Bringing in a partner and taking advantage of the huge amount of material online are great starting points. Then there is the age-old advice for getting started on any complicated endeavour: JFDI!

Conclusion

So don’t just kick off a bunch of projects and then wonder why they didn’t get you to where you hoped they would be. Start thinking more deeply about the AI Adoption Pyramid:

- Align the security, risk & compliance teams, and build out the policies that will guide implementation.

- Empower members of the organisation to make architectural decisions around tools & models that align with those policies, and then evangelise the benefits.

- Choose appropriate projects that will bring benefits without exposing you to hallucination-based failures.

- Along the way, train your team and bring in partners who can help implement best practice frameworks and share some of their experience.

The potential of Gen AI blows my mind, and I haven’t been this excited about the future since I started working. It’s already changed the way I operate & think, and the models will only improve from here. Good luck with your projects, and let’s enjoy all the advances that are coming!
